VR pioneer Edward Saatchi: VR films aren’t the future of storytelling

As one of the founding members of Oculus Story Studio, Edward Saatchi has spent years exploring how VR can be used to make narratives more immersive. But with Fable Studio, his latest virtual reality outfit, Saatchi has come to a surprising revelation: The future of storytelling doesn’t lie in VR movies. That’s an odd but interesting stance for someone who’s devoted years of his life to the potential for VR narratives. Instead of trying to mimic the experience of films through virtual reality, Saatchi believes there’s even greater potential in designing realistic characters for AR and VR. Imagine a virtual companion that can learn over time and interact with you and your family naturally.

That starts with Lucy in the studio’s upcoming release, The Wolves in the Walls. She interacts with the viewer naturally by doing things like handing you objects and reacting to where you’re looking during a conversation. She can also remember what you’ve done and bring it up later. Since Wolves has been in the works for a few years now (it was originally an Oculus Story Studio project), it’s still a fairly traditional VR narrative. You play as Lucy’s imaginary friend and experience the story fairly linearly. But for Saatchi, it’s the first step toward his dream of the ideal virtual personality.

“We almost feel that we relaunched not as a VR movie studio but a company focused on a really hard technical and creative problem, which is [developing] an interactive character,” Saatchi told Engadget. “Instead of abstracting it from Lucy or from a character who’s seemingly alive and saying, ‘We’re just building tech over here,’ that’s totally wrong.” Simply put, he thinks viewers respond more to actual characters rather than archaic game-engine updates.

Saatchi recruited designers from some of his favorite interactive games, including people who’ve worked on the character Elizabeth in Bioshock Infinite, Ellie in The Last of Us and the canceled but infamously ambitious Steven Spielberg project LMNO. He also brought on Doug Church as a consultant, a designer who worked on LMNO and is well known for developing groundbreaking games like System Shock.

“From the very beginning, I think what set us apart in the studio was that the people leading it all loved video games and narrative games,” Saatchi said. “I think a lot of VR studios out there often were led by people who didn’t [love games]. I’ve been playing narrative games since I was five … When we founded Story Studio, it was the intersection of immersive theater, narrative games and cinema…. I haven’t had as many friends who have a good sense of immersive games, like Gone Home, Tacoma and Virginia.”

In many ways, VR filmmaking today resembles the early days of cinema. Initially, directors tried to replicate their experience on the stage, so they placed a camera down and shot films as if the audience were sitting in the front row of a theater. It took a while for artists to truly understand the power of cinematic language: things like editing and creative camera placement. Similarly, Saatchi thinks much of VR cinema today is trying to replicate what we know from films. Three-hundred-sixty-degree VR videos are indeed more immersive than 2D films, but they still follow plenty of the same rules. And even more-interactive virtual reality experiences often reflect what we know from cinema rather than expand on it.

“For us, four years ago, we were like, this is the place: immersive theater, narrative games!” he said. “With Lost we created the first VR movie with a beginning, middle and end. With Henry, we won the first Emmy [for VR]. Dear Angelica was the first film made in VR [using the app Quill]. I love how unhinged [our work] is from game engines. Looking at it from the point of view of early games, people just making their content and not realizing they were building game engines, I think it’s the same with Dear Angelica. We’re building an engine, but really it’s out of love for telling that particular story.”

“Four years ago, our vision of where [narrative VR] might go was pretty much Wolves, to be honest,” Saatchi added. “Now that we’re actually close to that vision, I don’t think that’s the future.”

Instead, he envisions a “cross platform interactive character” that’s viewable through augmented reality glasses, similar to Microsoft’s HoloLens and Magic Leap’s One. He imagines coming home to find his kids wearing AR glasses and being read to by an AR character, almost like a caretaker. When they want to watch TV, she could sit on the couch with them and respond to what’s on the screen. Saatchi likens it to Joi in Blade Runner 2049 — a character who isn’t quite conscious but is smart enough to interact with you realistically.

“I could see a world where the GUI [graphical user interface] for spatial computing is not Minority Report or even HoloLens,” Saatchi said. “It’s called spatial, meaning 3D. So what do I get information from that’s 3D? People. Instead of the GUI being tied to the mouse and keyboard or a touchscreen, it might be an interactive character, like Alexa and Siri.”

He points to Spike Jonze’s Her as an example — but he’s not as interested in the lead AI, voiced by Scarlett Johansson. (Surprisingly enough, Saatchi is skeptical about “perfect” AI being possible in our lifetime.) Instead, he’s enamored with the tiny, foul-mouthed video game character that steals the film for a few scenes. It seems realistic because it’s a bit subversive and unexpected. Interacting with a character like that is far more seamless than putting on a VR headset and sitting through several experiences, all the while disconnected from the world. Even Saatchi, who’s built a career around VR, describes wearing a headset as being “pretty stressful.”

“When I start to project out, I imagine a writer’s room that’s not trying to build 19-minute chunks of story for movies or even five-year arcs for TV shows,” he said. “Instead, it’s a writer’s room for building one character. The team that created Joi in Blade Runner 2049 had to create massive amounts of story work, memories and anecdotes for her, so you’d feel a sense of vulnerability. I think that might be the most interesting place for storytellers to focus.”

Of course, to make this vision a reality, we need affordable and effective AR and VR glasses. Based on what we’re hearing about Magic Leap’s glasses, it seems like we’re getting close to that future — but it’ll still be a while before everyone can afford them. Saatchi says the AR experiences he’s seen were so realistic that he ended up moving around the space that characters were occupying even after they disappeared.

Looking ahead, Saatchi sees the version of Lucy in The Wolves in the Walls as version 1.0. The next step will be creating an AR version of Lucy, one who can identify and interact with multiple people. (You can see a glimpse of what that character could look like in his company’s promotional trailer, above.) As Fable Studio moves forward, Saatchi also plans to keep the failures of overly ambitious narrative experiments in mind. Nobody wants a repeat of Peter Molyneux’s Project Milo, after all.

CamSoda can sync your sex doll to an online performer

NSFW Warning: This story may contain links to and descriptions or images of explicit sexual acts.

If your goal is to have sex with someone through the internet, via the medium of a simulacrum of that person in the room, today is your lucky day. CamSoda, Lovense and RealDoll have teamed up to create VIRP, a system offering Virtual Intercourse with Real People. Put simply, it’s much like the teledildonic setups currently used by cam performers, albeit with a big latex doll instead of a little cylinder.

Each member of the collaboration will offer up something different, with RealDoll providing the full body, or torso, according to your specification. Lovense, meanwhile, will integrate its male masturbator technology — available to buy as the Lovense Max — into the doll’s crotch. CamSoda, of course, will act as the broker, connecting users with a mobile VR headset and the gear to a performer with the compatible vibrator.

The biggest issue with constructing ever-more virtual brothels, of course, is the escalating prices, and if you want to take part, it’ll cost you. A baseline RealDoll torso is more than a grand, while custom and full-bodied models can set you back as much as $10,000. Which is why RealDoll’s CEO believes that, in our grand sex robot future, you’ll likely rent, rather than own, the love machines that he creates.

Source: CamSoda

Virgin Media is giving all of its TV customers a V6 box

Virgin Media’s next-generation V6 box is now over a year old. Since its launch, the company has managed to install more than a million of the 4K-ready set-top boxes in UK homes — roughly a quarter of its total customer base. However, with a number of older, less-capable boxes still in use, Virgin Media has come up with a way to drag the remaining three-quarters of subscribers into the present: give away the Virgin V6 box for free.

From today, anyone with a Virgin Media TV and broadband package can apply for an upgrade to the company’s latest and greatest hardware. Gone is the £20 activation fee the company used to charge and so is the need to enter a new contract, allowing subscribers to utilise the V6 until they decide whether they want to renew their custom.

Virgin Media says it will begin contacting eligible customers in the “coming weeks” with details on how to apply for the upgrade. As it utilises the same infrastructure, the majority will be able to swap their old box with the new one without the need for an engineer visit.

The UK Prime Minister is creating an anti-fake news squad

In its most benign form, fake news can simply be clickbait designed to get eyes on banner ads. In other instances (the ones governments are primarily concerning themselves with), it’s propaganda designed to invoke outrage and sway public opinion on elections and other important decisions. UK Prime Minister Theresa May has previously accused Russia of “seeking to weaponize information” by “deploying its state-run media organizations to plant fake stories and photoshopped images in an attempt to sow discord in the west and undermine our institutions.” And now, she’s taking action by creating a new specialist unit tasked with actively tackling fake news. What we’re not being told, however, is how this anti-misinformation squad is supposed to do that exactly.

As Reuters reports, a spokesperson for the Prime Minister told the media following a meeting of the UK’s National Security Council: “We are living in an era of fake news and competing narratives. The government will respond with more and better use of national security communications to tackle these interconnected complex challenges.”

“We will build on existing capabilities by creating a dedicated national security communications unit. This will be tasked with combating disinformation by state actors and others. It will more systematically deter our adversaries and help us deliver on national security priorities.”

Nice and vague, then…

Just as the US is mulling the possible role fake news and Russia’s so-called ‘troll army’ had in influencing the outcome of the last Presidential Election, so the UK authorities are looking at how the same may have affected the Brexit vote and 2017 general election. While these retrospective reviews move forward, platforms including Google and Facebook — the places most people go to find their news — are taking more responsibility for the part they play as pseudo-publishers. They’re trying to help users sort the wheat from the chaff primarily by highlighting trusted media outlets and by demoting those on the sketchier side.

These attempts at fact-policing aren’t putting the concerns of governments to bed, though. Many US politicians believe regulation is key to fighting fake news; France is drafting new legislation to make sponsored stories more transparent, with state powers to remove factually incorrect news; and Germany recently introduced a law whereby social media companies can be fined up to 50 million euros for failing to deal with hate speech and fake news on their platforms.

In the UK, the response so far has merely been noncommittal talks of “sanctions” social media sites might face for hosting fake news. But now we’re getting real action in the form of a crack team that will ‘combat’ the issue and ‘deter our adversaries,’ whatever that could possibly mean. It sounds suspiciously like hot air the government is bellowing — taking the buzzword of fake news and promising that it’s actively doing, er, something about it.

Source: Reuters

Facebook buys a company that verifies government IDs

Facebook has snapped up a software firm that created tools allowing startups to instantly authenticate driver’s licenses and other government IDs. The social network said that Boston-based Confirm’s “technology and expertise will support [its] ongoing efforts to keep [its] community safe.” According to Reuters, the smaller company’s 26-or-so employees are joining Facebook, which makes sense, considering Confirm has already shut down its office and software offerings. However, the acquisition’s terms remain unclear, and neither side has revealed how the social network will use the ID verification technology.

Before you panic and think that Facebook will ask for your ID in the near future, note that Confirm’s technology has other potential applications. Reuters notes that the acquisition is “a step that may help the social media company learn more about the people who buy ads on its network” since as it is, all you need to buy ads is a credit card. If you’ll recall, Facebook admitted last year that Russian troll farms purchased tens of thousands of ads (divisive materials meant to exploit US social divisions) on its network before, during and after the US Presidential Elections. A verification procedure could prevent the purchase of ads linking to fake news Pages.

Another possible application is to authenticate identities of users who get reported for using names other than their legal ones. A few years ago, Facebook deleted accounts belonging to drag queens, Native Americans and other people over its real name policy. The social network has since changed that rule to allow “authentic names” (a name someone goes by, even if it’s not their legal one) and has imposed stricter rules when using the fake name reporting tool. Facebook could also use Confirm’s technology to verify the identities of people locked out of their accounts. We’ll know for sure once the social network announces new features. For now, Confirm only has this to say:

“When we launched Confirm, our mission was to become the market’s trusted identity origination platform for which other multifactor verification services can build upon. Now, we’re ready to take the next step on our journey with Facebook. However, in the meantime this means all of our current digital ID authentication software offerings will be wound down.”

Source: Reuters, TechCrunch

Taiwanese Apple Suppliers Face Falling Stock Prices Amid Ongoing Concern Over Weakened iPhone X Demand

Three major Apple suppliers faced falling stock prices on the Nikkei Asia300 Index today, believed to be directly related to “concerns over demand for iPhone X.” The three Taiwanese suppliers were Largan Precision, Hon Hai Precision Industry (Foxconn), and Taiwan Semiconductor Manufacturing Company, dropping 4.4 percent, 1 percent, and 3 percent on the index, respectively.

iPhone X demand concerns and decline in supplier stock prices came after the latest analyst report by JP Morgan yesterday, predicting “slashed” iPhone X orders in the first part of 2018. In a research note reported by CNBC, analyst Narci Chang said “high-end smartphones are clearly hitting a plateau this year,” singling out Apple by forecasting that iPhone X manufacturing “might be down 50 percent quarter-over-quarter.”

Reports of “weakened” iPhone X demand heading into 2018 began emerging late last year, mainly stemming from analyst belief that the high price of the device would eventually lead to reduced sales after early adopters got their iPhone X. These reports have caused several Apple suppliers to be anxious over low order visibility for the full range of iPhone 8, iPhone 8 Plus, and iPhone X models in Q1 2018. CLSA analyst Nicolas Baratte argued that the reported reduction of the iPhone X’s Q1 2018 shipment forecast from 50 million units down to 30 million units “remains inflated.”

Despite multiple stories about the iPhone X’s plateaued demand in early 2018, the smartphone is believed to have sold well following its fall launch in 2017 and throughout the holiday season. Research data shared just yesterday by Canalys reported that Apple shipped 29 million iPhone X units in Q4 2017, making the device the “world’s best-shipping smartphone model over the holidays.”

Earlier in January, Kantar Worldpanel said that the iPhone X saw “stellar” performance in several countries during November of last year, though it was outsold by the iPhone 8 and the iPhone 8 Plus in the United States. Combined, Apple’s three new iPhones captured the top spots for best-selling smartphone models during the month. Kantar’s global OS data pointed towards “staggering” demand for the iPhone X in China from users said to be switching sides from rival smartphone makers.

We should get a better view of how the iPhone X sold soon, when Apple reveals its earnings results for the first fiscal quarter of 2018 on Thursday, February 1.


iOS 11.3 Will Support Life-Saving Feature That Sends an iPhone’s Precise Location to Responders in Emergencies

Apple today previewed what to expect in iOS 11.3, including new Animoji, health records, ARKit improvements, the ability to turn off Apple’s power management feature on iPhone 6 and newer, and much more.

At the very bottom of its press release, Apple also briefly mentioned a potentially life-saving feature coming in iOS 11.3: support for Advanced Mobile Location in countries where it is supported.

Additional iOS 11.3 Features: Support for Advanced Mobile Location (AML) to automatically send a user’s current location when making a call to emergency services in countries where AML is supported.

Advanced Mobile Location will recognize when an emergency call is made and, if not already activated, activate an iPhone’s GPS or Wi-Fi to collect the caller’s precise location information. The device then sends an automatic SMS to the emergency services with the caller’s location, before turning the GPS off again.
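The sequence described above can be sketched in a few lines of Python. This is only an illustration of the order of operations, not Apple's or any carrier's implementation: the `Phone` class, its fields, the coordinates and the message text are all hypothetical, and the placeholder string is not the real AML message format.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Phone:
    """Toy model of a handset; every name here is hypothetical."""
    location_on: bool = False
    fix: Tuple[float, float] = (51.5007, -0.1246)  # made-up coordinates
    outbox: List[str] = field(default_factory=list)

    def read_location(self) -> Optional[Tuple[float, float]]:
        # A real device would query GPS or Wi-Fi positioning here.
        return self.fix if self.location_on else None


def handle_emergency_call(phone: Phone) -> None:
    """Follow the sequence described above for a single emergency call."""
    was_on = phone.location_on
    if not was_on:
        phone.location_on = True  # activate location only if it was off
    position = phone.read_location()
    if position is not None:
        lat, lon = position
        # Placeholder text, not the real AML message format.
        phone.outbox.append(f"EMERGENCY-LOCATION lat={lat:.4f} lon={lon:.4f}")
    if not was_on:
        phone.location_on = False  # restore the user's original setting


phone = Phone()
handle_emergency_call(phone)
print(phone.outbox)
```

The one design point worth noting is that the sketch remembers whether location was already on, so it only switches it off again if the call itself switched it on.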

Advanced Mobile Location is allegedly up to 4,000 times more accurate than current emergency systems, which rely on cell tower location with a radius of up to several miles, or assisted GPS, which can fail indoors.

Advanced Mobile Location must be supported by carriers. EENA, short for the European Emergency Number Association, said the service is fully operational in several European countries, including the United Kingdom, Estonia, Lithuania, Austria, and Iceland, as well as New Zealand, on all mobile networks.

EENA said AML has saved many lives by more accurately pinpointing a person’s position. Precise location can also save several minutes per call, according to the European Telecommunications Standards Institute:

Ambulance Service measurements show that, on average, 30 seconds per call can be saved if a precise location is automatically provided, and several minutes can be saved where callers are unable to verbally describe their location due to stress, injury, language or simple unfamiliarity with an area.

A few years ago, Google implemented a similar AML-based solution called Emergency Location Service into Google Play services that automatically works on Android devices running its Gingerbread operating system or newer.

EENA called on Apple to support Advanced Mobile Location last August, and starting with iOS 11.3 this spring, its wish will be fulfilled.


YouTube reportedly curbing musician criticism with promotion deals

YouTube has always had a rocky relationship with the music industry, and the struggle looks set to continue following reports that the video streaming service is effectively bribing artists to keep their criticisms to themselves. According to sources cited by Bloomberg, YouTube has given a number of musicians several hundred thousand dollars for promotional support, on the promise that they don’t say negative things about the site.

Such non-disparagement agreements are common in business, but as the sources cited note, YouTube’s biggest direct competitors in the music biz don’t use them. It seems that YouTube is taking extra precautions to preserve itself following a number of fall-outs with the industry, which has condemned the site for its meager revenue-sharing and failure to police the misuse of copyrighted material.

The company is trying to make amends, though. In December it partnered with Sony and Universal for a new music subscription service and has just launched unified Official Artist Channels to improve the fan experience. It’s also likely that music execs are already well aware of YouTube’s “please don’t be mean about us” caveats, but the fact that it’s out in the public sphere isn’t going to improve YouTube’s image, which is what it was trying to preserve in the first place.

Source: Bloomberg

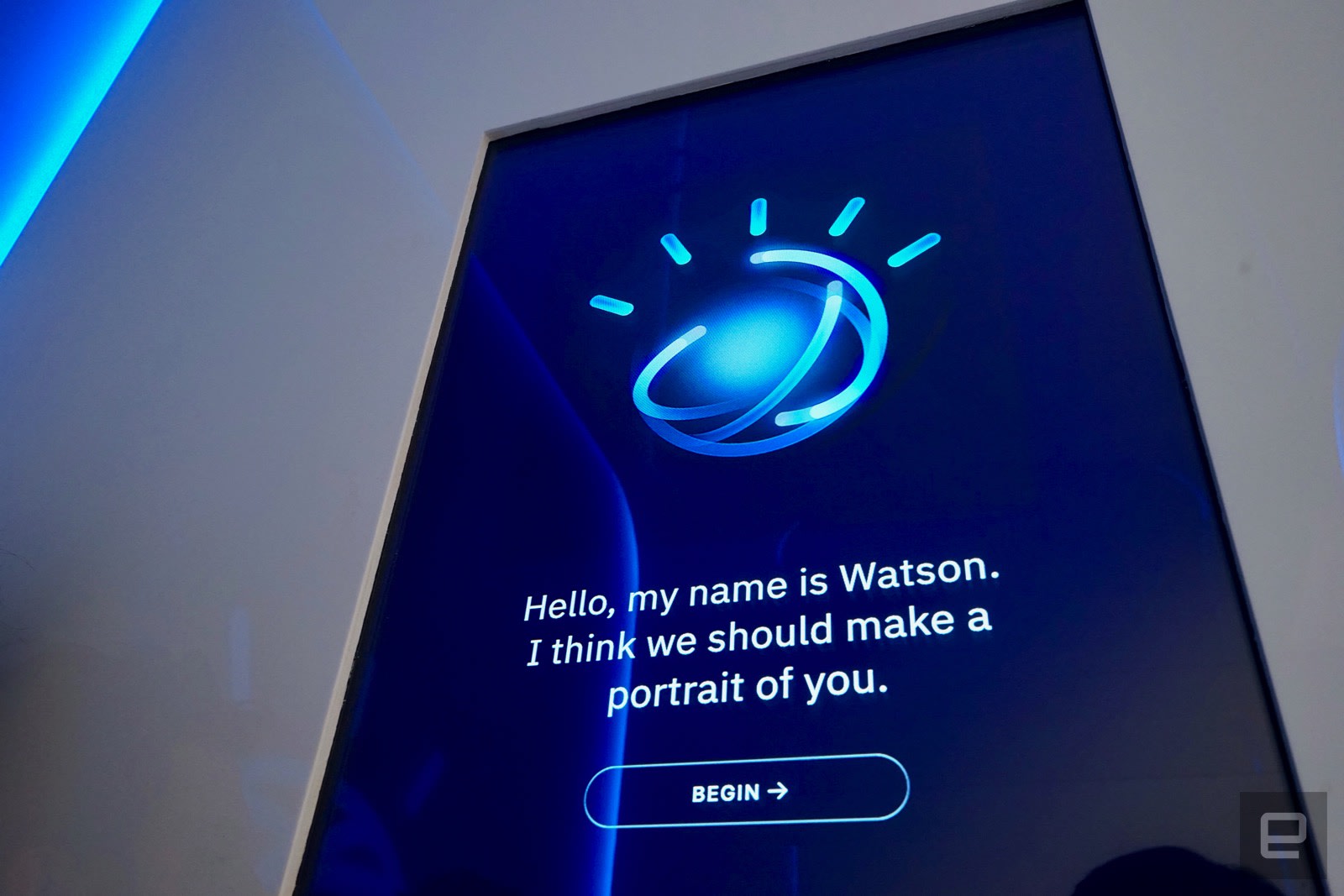

IBM is sending Watson to the Grammys

After winning Jeopardy and designing cancer-treatment plans, IBM Watson is now strutting off to the red carpet of the 60th Annual Grammy Awards. The tech giant’s versatile AI system will be curating and distributing award-show content and images of everyone’s favorite music stars in real time, straight from the red carpet to people’s social media feeds.

IBM and the Recording Academy announced their partnership to use the Watson Media suite at the Grammys today. Want to quickly see who has the coolest looks on the red carpet? Want to gain AI-generated insights into the “emotional tone” of songs by this year’s nominees? Watson’s got you covered.

Behind the scenes, Watson’s AI will be used to help streamline the academy’s digital workflow. In the past, the Grammys relied on third-party photography services to aggregate and distribute images from the five-and-a-half-hour red carpet pre-show. This process is cumbersome and much too slow in a social-media-fueled era of instant gratification. But with thousands of photos taken at the red carpet each year, manually sorting, tagging and tweeting is pretty much a nonstarter. So Watson is stepping in to make the Recording Academy’s life much easier.

“At the end of the day, it really boils down — especially on Grammy Sunday — to making sure the fan experience is as deep and as engaging as it possibly can be,” Evan Green, the Recording Academy’s chief marketing officer, told Engadget.

Green added that the academy’s partnership with IBM offers an opportunity to use Watson’s ever-expanding platform of services to synthesize all the information coming out of the red carpet in a digestible way. It’s just the latest example of IBM using Watson to enhance fan experiences for major live events. For instance, Watson’s capabilities were used to generate tennis highlight videos at the US Open last year.

For Grammy night, IBM created a system that ingests red carpet images from Getty and analyzes them in real time to identify the people in the photos, the colors of the outfits being worn and the people’s emotions. This information is then added to the metadata for each image as the Academy sends them out to the media and through its own social channels.
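As a rough illustration of that kind of pipeline, the Python sketch below runs a set of analyzers over a photo and stores each result in the image's metadata. It does not use IBM's actual services: `Photo`, `tag_photo`, the stub analyzers and the labels they return are all hypothetical stand-ins.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Photo:
    filename: str
    metadata: Dict[str, List[str]] = field(default_factory=dict)


# An analyzer takes a filename and returns a list of labels.
Analyzer = Callable[[str], List[str]]


def tag_photo(photo: Photo, analyzers: Dict[str, Analyzer]) -> Photo:
    """Run each analyzer and store its labels under its metadata key."""
    for key, analyze in analyzers.items():
        photo.metadata[key] = analyze(photo.filename)
    return photo


# Stub analyzers returning canned labels; a real system would call
# an image-recognition service here.
stub_analyzers: Dict[str, Analyzer] = {
    "people": lambda name: ["Example Artist"],
    "colors": lambda name: ["red", "gold"],
    "emotions": lambda name: ["joy"],
}

tagged = tag_photo(Photo("carpet_0001.jpg"), stub_analyzers)
print(tagged.metadata)
```

Keeping each analyzer behind the same simple signature means a new kind of tag could be added without changing the tagging loop.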

Watson will also cross-reference outfits worn this year with looks from red carpets past, giving fans a connection to some of their favorite moments in the show’s history, said John Kent, the program manager for IBM Worldwide Sponsorship Marketing.

The company’s Watson Tone Analyzer API will also sort the lyrics of nominated tunes and albums into five categories: joy, fear, disgust, anger and sadness. As with the red carpet looks, these will be compared to past music from this year’s nominees, Kent added.
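To make the comparison concrete, here is a minimal Python sketch that picks the strongest of the five categories for a song. It assumes each song already has a score per category; the scores are invented, and the `dominant_tone` helper is hypothetical rather than part of the Tone Analyzer API.

```python
from typing import Dict

TONES = ("joy", "fear", "disgust", "anger", "sadness")


def dominant_tone(scores: Dict[str, float]) -> str:
    """Return the category with the highest score; missing ones count as 0."""
    return max(TONES, key=lambda tone: scores.get(tone, 0.0))


# Made-up scores for two hypothetical songs.
new_song = {"joy": 0.72, "sadness": 0.31, "anger": 0.05}
old_song = {"joy": 0.20, "sadness": 0.64, "fear": 0.11}

print(dominant_tone(new_song), dominant_tone(old_song))
```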

IBM and the academy launched a website where fans can check out these features over the course of Sunday’s red carpet show. The goal is to make watching the Grammys feel more interactive for viewers, said Noah Syken, vice president for sports and partnerships at IBM. By digging into past awards looks and getting a better understanding of the musicality of nominated songs, fans can have a better understanding of this year’s nominees and where they fall in the Grammys’ storied pop culture history.

So, what is the development process like for adapting Watson’s capabilities to an event like this? For Kent, it involves an assessment of what Watson can and cannot do, and how it could be most useful to a client like the Recording Academy.

“We do an overview of what Watson is and what Watson isn’t. What are the challenges? What is the audience? The big challenge with the red carpet is ‘how can we help the Recording Academy deal with the hundreds of thousands of photos taken on the red carpet?’” Kent said. “So in developing programs, we always make sure we are checking efficiency boxes and checking fan-experience boxes and making those happen.”

It essentially boils down to “getting the most value out of the data” being gathered, Syken added.

Kent said that just as with serving up real-time highlights for US Open fans, Watson’s visit to the Grammy red carpet offers a template for how this kind of data analysis could be used for less-splashy projects.

While this time Watson is being used to contextualize viral pop culture moments, Kent stressed that the supercomputer’s suite of programs can be applied to any number of businesses. In the past, Watson’s ability to aggregate and interpret large swaths of data has been used to create innovative recipes and even diagnose a rare form of leukemia.

“It’s important for Watson to help real businesses, and that’s really what we are doing here,” he said. “The Grammys happen to be a great cultural experience, but it all goes back to ‘how is Watson going to drive new value for partners and clients? How will they be able to harness insights from all these photos, for instance, to create all these new experiences? To create meaningful experiences?’”

When Watson first won Jeopardy in 2011, the capabilities of artificial intelligence weren’t obvious to much of the public. But after its time in the spotlight on a game show stage, suddenly what once felt like science fiction became accessible. Flash forward seven years and Syken said that partnerships like this one at the Grammys continue to underscore just how useful AI can be. We’ll have to wait and see where Watson goes next.

Plex jumps into VR on Google Daydream headsets

Last year Plex’s reach extended to cover live TV and automobiles, so in 2018 the company is jumping into yet another platform: virtual reality. As it has done before, the company brought in the developer of a community project, Plevr, to work on the new client, and Plex VR looks like a nice addition. The content browsing experience I saw in a short demo looked excellent. It really made use of the virtual space with touches like selecting a title popping it out into a virtual DVD case that users could hold, rotate or put down on a table in front of them while they flipped through other options.

That interactivity goes along with comfort as two of three pillars marketing VP Scott Hancock discussed, and comfort comes into play as users can adjust the screen’s size or position at any time. Want to lie down and watch it on the ceiling of a swanky apartment instead of a wall, in a blank void or in a drive-in? It’s all possible. In the apartment environment, for example, when video pauses, the lights come on and shades come up, mimicking real-world smart homes.

The third pillar may be the most important for adoption, and that’s social. Plex VR allows for up to four users at once, who can easily watch video streaming from a Plex Media Server while seeing each other’s avatars, voice chatting or using the Daydream controller to toss things around and point out things on-screen.

So far, many big media VR apps haven’t included a social element, but for Plex owners who already have well-stocked media libraries, it could be an easy way to share viewing across any distance. The social setup includes a friends list with online statuses similar to PlayStation or Xbox Live, although of course, you can appear offline if you’d rather sit down for a solo session.

Plex VR is available for free, although for now, it’s only out on Daydream VR headsets. Using the social features requires at least one of the four users to have Plex Pass; however, users can try them out for free for a week even without that.

Source: Plex Blog