Apple adds 13-inch MacBook Pro to its list of refurbished options

Why it matters to you

If you’ve been waiting to pick up a 13-inch MacBook Pro with Touch Bar but the price was holding you back, then consider a refurbished unit.

The most important new feature introduced with the 2016 MacBook Pro was the new Touch Bar OLED touchscreen. The new input mechanism is Apple’s answer to the touch display and is being used by more and more developers to provide task-based virtual function keys to popular MacOS applications.

The downside of the new machines is their price, with even the entry-level MacBook Pro 13 with Touch Bar coming in at a significant premium. One alternative for anyone who wants the new machine but can’t quite fit it into a budget is to pick up an Apple refurbished machine, which now includes the 13-inch MacBook Pro with Touch Bar, as MacRumors reports.

The other members of the new MacBook Pro line were added to Apple’s refurbished lineup last month. Savings on those machines are considerable, with the 15-inch MacBook Pro with Touch Bar that usually costs $2,399 being available as a refurb for $2,039. Other configurations offer up similar savings.

Now, the 13-inch MacBook Pro with Touch Bar rounds out the list of available refurbished machines. The list changes constantly, and so you’ll need to check on your desired configuration when you’re closer to making your purchase, but here are a few examples of the savings you can enjoy:

- 13-inch MacBook Pro with Touch Bar, 2.0GHz dual-core Core i5, 8GB LPDDR3 RAM, 256GB SSD, Intel Iris Graphics 550: Normally $1,799, refurbished price $1,529

- 13-inch MacBook Pro with Touch Bar, 2.9GHz dual-core Core i5, 8GB LPDDR3 RAM, 512GB SSD, Intel Iris Graphics 550: Normally $1,999, refurbished price $1,699

- 13-inch MacBook Pro with Touch Bar, 2.9GHz dual-core Core i5, 16GB LPDDR3 RAM, 512GB SSD, Intel Iris Graphics 550: Normally $2,199, refurbished price $1,869
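To put those discounts in perspective, here is a quick back-of-the-envelope sketch that works out the dollar and percentage savings for the prices quoted above. The labels and the `savings` helper are our own shorthand, and the 8GB/512GB entry assumes a $1,999 list price and a $1,699 refurbished price. Since Apple's list changes constantly, treat the figures as a snapshot rather than current pricing.

```python
# Prices quoted in the article, in US dollars: (label, new price, refurbished price).
# The 8GB/512GB entry assumes $1,999 new and $1,699 refurbished.
PRICES = [
    ("15-inch with Touch Bar", 2399, 2039),
    ("13-inch, 8GB RAM, 256GB SSD", 1799, 1529),
    ("13-inch, 8GB RAM, 512GB SSD", 1999, 1699),
    ("13-inch, 16GB RAM, 512GB SSD", 2199, 1869),
]


def savings(new_price, refurb_price):
    """Return (dollars saved, percent saved) for one configuration."""
    saved = new_price - refurb_price
    return saved, 100 * saved / new_price


for label, new_price, refurb_price in PRICES:
    saved, percent = savings(new_price, refurb_price)
    print(f"{label}: save ${saved} ({percent:.1f}%)")
```

Run against these numbers, every configuration comes out at roughly 15 percent off the new price.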

As always, Apple stands behind its refurbished machines with its usual one-year limited warranty, and you can give your refurbished choice a try with the security of Apple’s standard 14-day return policy. These are the same policies that are applied to Apple’s new retail units, with the added benefit that refurbished machines go through an extensive checklist to make sure they’re in good shape.

Again, Apple’s list of refurbished machines changes regularly, so you’ll want to keep tabs on what’s available as you get closer to pulling the trigger. In the meantime, you can start planning with the knowledge that you’ll save some serious cash by picking up a lightly used 13-inch MacBook Pro with Touch Bar.

More Live Photos will be in more places following Apple’s release of the API

Apple’s Live Photos will be coming to more apps, thanks to the recent release of the feature’s API. Apple recently shared the code behind the living pictures, allowing third-party developers to integrate them into their apps and websites.

Live Photos launched in 2015 with the release of the iPhone 6S. Live Photos are both a still photo and a video in one format. The file type is shared as a picture, but when the image is tapped, a three-second video plays. That’s because when shooting the live photo, the camera also captures what happens 1.5 seconds before and 1.5 seconds after the image is taken.
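For the curious, the "before and after" capture can be pictured as a rolling buffer: the camera keeps only the most recent 1.5 seconds of frames, and a shutter press freezes that buffer and records 1.5 seconds more. The sketch below is a toy model of that idea, not Apple's implementation. The 30 frames-per-second rate, the `capture_live_photo` function and the numbered "frames" are all assumptions made for illustration.

```python
from collections import deque

FPS = 30            # assumed frame rate, purely illustrative
PRE_SECONDS = 1.5   # footage kept from before the shutter press
POST_SECONDS = 1.5  # footage recorded after the shutter press


def capture_live_photo(frames, shutter_index):
    """Return (still, clip) for a shutter press at frames[shutter_index]."""
    pre_count = int(FPS * PRE_SECONDS)
    post_count = int(FPS * POST_SECONDS)
    # The rolling buffer only ever holds the most recent pre_count frames.
    buffer = deque(maxlen=pre_count)
    for frame in frames[:shutter_index]:
        buffer.append(frame)
    still = frames[shutter_index]
    after = frames[shutter_index + 1 : shutter_index + 1 + post_count]
    clip = list(buffer) + [still] + after
    return still, clip


# Simulate ten seconds of numbered frames and press the shutter at frame 150.
frames = list(range(FPS * 10))
still, clip = capture_live_photo(frames, 150)
print(still, len(clip), clip[0], clip[-1])
```

The resulting clip runs from 45 frames before the still to 45 frames after it, which at the assumed frame rate is about three seconds of video wrapped around a single picture.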

While the feature has been around for almost two years, few programs support the upload of Live Photos, leaving fans of the feature with few ways to actually share the photo-and-video-in-one format. Without access to the API code, few have figured out how to enable the feature, although both Tumblr and Facebook already support them.

With the release of the API, developers have access to parts of the Live Photo code, which allows them to support the feature. Once third-party developers integrate the API into their websites, Live Photo fans will have more places to share their short video clips.

The new developer kit will allow app designers to add Live Photos compatibility when crafting applications for iOS, MacOS and tvOS. For web designers, the JavaScript-based LivePhotosKit will allow compatibility with more web applications.

Live Photos is now the default mode on iPhone 6S and later unless the feature is switched off, with the same editing options for stills available from Apple’s Photos app. Now, when live images are shared in supported apps, they’ll have the same tap-to-play behavior as they do inside Apple’s native apps. While it’s unclear which apps will adopt the feature and when, the release of the API makes it easier for more apps to support it.

From automated cars to AI, Nvidia powers the future with hardcore gaming hardware

At most companies, technology takes a trickle-down approach. New innovations first target big companies and governments, then eventually find their way to home users. That’s not how things work with Nvidia. The company is mostly known as a graphics card maker, and deservedly so, since at the last count it controlled as much as 70 percent of the add-in card market. Where it stands apart among its contemporaries, though, is in taking the same underlying technology that powers its most impressive graphics cards and applying it to other tasks.

In recent months, Nvidia has pushed itself as a company at the forefront of ‘edge’ AI development, which concerns local algorithmic processing and analytics. Nvidia considers itself uniquely positioned to be a pioneer of that burgeoning technology space, and the provider for much of its future hardware, thanks to its consumer graphics developments.

To find out more about Nvidia’s vision for the future, we spoke with the company’s vice president of sales and marketing in Europe, Serge Palaric. He talked us through the green team’s plan for a future that ranges from traditional gaming hardware to cutting-edge AI development.

Bringing AI to the user

Despite the added pressure of AMD’s upcoming Vega graphics cards, Nvidia is not looking to narrow its approach. “All technology is linked to deep learning and AI,” Palaric said.

Nvidia made its commitment to AI clear with the recent announcement of its second-generation Jetson TX hardware, a localized AI processing platform that Nvidia thinks will popularize the idea of what it calls “inference at the edge.” The goal is to provide the parallel computing ability needed to make complicated algorithmic choices on the fly, without cloud connectivity.

Many contemporary technologies, from autonomous cars, to in-home audio assistants like Amazon’s Echo, use AI to provide information or make complicated decisions. However, they require a connection to the cloud, and that means added latency, as well as reliance on mobile data. Nvidia wants to bring equivalent processing power to the local level, using the parallel processing capabilities of its powerful graphics chips.

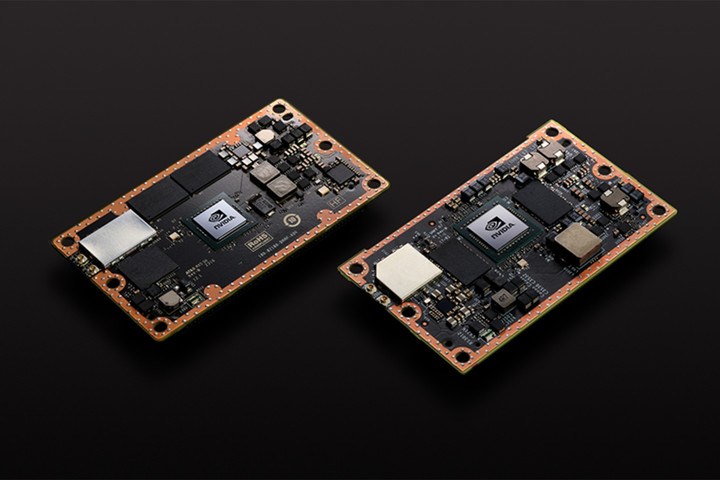

Nvidia Jetson TX2 and TX1

“You have to have a powerful system with adequate software to handle the learning part of the equation,” Palaric said. “[That’s why] we launched the TX1. It was the first device that could handle that sort of learning [locally].”

Combining a powerful graphics processor with multiple processor cores and as much as 8GB of memory, the Jetson TX1 and its sequel, the TX2, are Nvidia’s front-line assault on the local AI supercomputing market. They offer powerful computing on a single PCB, meaning that no data needs to be sent remotely to be processed.

The TX2 was only recently announced, but represents a massive improvement over the TX1. Built to the same physical dimensions as its predecessor, it offers twice the performance and efficiency, thanks to the introduction of a secondary processor core, a new graphics chip, and double the memory.

More importantly, each Jetson board measures just five inches in length and width, so it can fit in most smart hardware.

Nvidia Jetson TX2

“Say you are building a smart camera and using facial recognition software, and you want to have an answer right away,” Palaric said. “Having a part of the deep learning inference inside the camera is the solution. You can download the dataset inside your TX1 or now TX2, and you don’t have to wait to get an answer from the datacenter. We are talking a few milliseconds, but having the AI at the edge helps make things happen immediately.”

Nvidia’s embedded ecosystem and PR manager for the EMEA region, Pierre-Antoine Beaudoin, expanded on Palaric’s point. “In something like voice recognition for searching on YouTube, it makes sense to send the voice through the cloud, because the action of pushing the video through search will happen on the cloud,” he said. “When it comes to a drone or self-driving cars, though, it’s really better to have the action taken by the AI locally because of the reduction in latency.”
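A rough worked example shows why latency matters so much for anything that moves. The sketch below estimates how far a vehicle travels while it waits for a decision. The speed and both latency figures are assumptions picked for illustration, not numbers from Nvidia, and `distance_during_latency` is simply our own helper name.

```python
def distance_during_latency(speed_kmh, latency_ms):
    """Metres travelled while waiting latency_ms for a decision."""
    speed_ms = speed_kmh * 1000 / 3600  # convert km/h to metres per second
    return speed_ms * latency_ms / 1000


# All figures below are assumptions chosen for illustration.
SPEED_KMH = 100
for label, latency_ms in [("cloud round trip", 100), ("on-board inference", 10)]:
    metres = distance_during_latency(SPEED_KMH, latency_ms)
    print(f"{label}: {metres:.2f} m travelled at {SPEED_KMH} km/h")
```

Under these assumed numbers, a car at highway speed covers a few metres during a cloud round trip and well under half a metre with a local answer, which is the gap that inference at the edge is meant to close.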

Graphics-powered vehicles

Autonomous driving is a major avenue of interest for Nvidia, and several of its new hardware packages are designed specifically with it in mind. While many of the world’s auto manufacturers and technology companies are developing their own driverless car solutions, Nvidia wants to be the hardware provider of choice, and Palaric believes it’s in position to fill that role.

Although a different platform than Jetson, Nvidia’s autonomous car-focused Drive PX 2 is still built on the foundation of a Tegra processor, the same kind found in Jetson hardware and many consumer devices, like the Nintendo Switch and Nvidia’s own Shield tablet. The use of Tegra in all these devices demonstrates how deeply the green team’s consumer, enterprise, and research interests are linked.

“With the Drive PX 2, we have a platform that we can provide to tier one OEMs. If you look at what we showed off at CES this year, we demonstrated autonomous driving with only a single camera,” Palaric said.

While it seems likely that multiple cameras and sensors will be used by the first generation of truly autonomous vehicles, masses of computing power will be required to process all that information, and Nvidia wants to provide the hardware to make it happen locally.

The Drive PX 2 is designed, Palaric said, to show off the capabilities of its planned Xavier platform. That system will have all the power of the PX 2 in one chip. By giving car manufacturers an idea of what the hardware capabilities will be so far in advance, Nvidia hopes to make it easier for them to adjust their designs accordingly. Nvidia is doing the same with its Jetson TX2, which has the exact same physical dimensions as the TX1. That makes it much easier for hardware manufacturers to plan their designs around known capabilities and form factors.

Nvidia Drive PX2

As Palaric highlighted, the auto industry tends to have long development times of four years or more for new vehicles. Having an evolving but predictable platform like Drive PX 2, and eventually Xavier, means that manufacturers can plan for advances, rather than build old technology into the vehicles.

“This is where we are different,” Palaric said. “If you look at most modern cars, they have many different devices that do one task. We are trying to bring one platform that will be able to handle all of the new deep learning tasks, as well as replace all black box and infotainment hardware inside the car.”

“We are also providing a ton of software to help the car manufacturers to develop their own software based on our hardware platform.”

Once again Beaudoin had a concise way to encapsulate the problem Nvidia is trying to solve with its hardware. “For the past 25 years we have been focusing our efforts on simulating the real world in a computer. Now, what we’re trying to do is make computers understand the real world without simulations.”

Consumer graphics ‘drives’ it all

If this conversation has left you concerned that Nvidia may be doing all the above at the expense of its graphics card developments, don’t worry. Palaric made it very clear these advances don’t come at the expense of future graphics improvements. While the desktop PC industry has been contracting every year, gaming has continued to grow, and remains the foundation of the company.

“Our consumer business is growing a lot,” Palaric said. Further, it’s Nvidia’s GPU advancements that drive everything else. “We use the same GPU architecture in consumer, in automotive, and other industries.”

“When we launched Pascal in July 2016, seven months later we could include Pascal architecture in TX2, so the difference between what we are doing at the consumer level and at the extreme in other markets, is lessening all the time.”

We did press Palaric on when we might expect to see the much-promised HBM memory show up in Nvidia graphics cards. Unfortunately, he wouldn’t say much about the next generation of consumer graphics hardware, but did tell us that we would “know soon.”

When asked about the difficulties Intel has faced developing 10nm parts, and whether Nvidia may run into similar problems as it approaches that die size, he didn’t seem fazed.

“Intel has a challenge with 10nm,” he said, and suggested it would likely be one faced by most typical manufacturers. However, it’s not something he’s too concerned with. While he wouldn’t go into any detail about Nvidia’s plans for future GPU generations, he did say that few people were concerned about the size of Nvidia’s next die.

“Nobody is asking me what size our next GPU will be. They’re asking how they can use our technology to solve their problem,” he said.

Today’s graphics will be tomorrow’s AI

“We are at the start of deep learning and AI,” Palaric said. “It’s moving from universities and research centers into the enterprise world, and that is why we are putting a lot of energy into figuring out what technology people need to achieve their goals.”

In a move that seems likely to benefit us all, whether hardware enthusiast or future autonomous technology user, Nvidia plans to continue driving commercial graphics development to improve performance and efficiency, leading to more advanced local algorithmic processing in the future.

“The more powerful GPU we have, the more powerful things we can do in the consumer space, and that technology leads us to new innovations in deep learning and AI,” Palaric said.

Just as gaming is helping pave the way for the future of virtual reality, it may provide the hardware needed to make local AI possible, too. The next time you’re fretting over an insatiable love of graphics cards, remember that seeking maximum framerates in Fallout 4 also means seeking more capable automated cars, and smarter AI. It’s the same technology under the hood, after all.

Microsoft rolls out Focused Inbox for Gmail accounts in Windows 10 Mail app

Why it matters to you

Your Gmail account will be easier to manage with the addition of Focused Inbox support in the Windows 10 Mail & Calendar app.

Microsoft has spent tremendous time and energy on improving Windows 10, resulting in the recently released Creators Update and continuing with work on the next major update, Redstone 3. Along the way, the company has also worked to improve its first-party Windows 10 apps.

One of the most important is the Mail & Calendar app, which is the default mail app for Windows 10 users and still needs some work to be as good as Microsoft’s Outlook Mobile for iOS and Android. Today, the company announced expanded support for Gmail accounts, which will go a long way toward making the Mail & Calendar app more useful for more Windows 10 users.

The main update that Microsoft is introducing to the Mail & Calendar is Focused Inbox support for Gmail. Focused Inbox uses machine intelligence to guess which messages are most important and then places them under a “Focused” tab in an account’s inbox. Emails that the system considers nonessential are shunted to an “Other” tab, to let users spend their time on only the most important items. Additional Gmail support includes faster and better search and the ability to track travel and package information.

Focused Inbox and other enhanced functionality have been available for Outlook.com accounts and Office 365 email addresses for a few weeks, and now they’re rolling out for Gmail accounts as well. The features aren’t available for everyone quite yet, and Windows Insiders will be the first to get a notification when the new functionality arrives for them. The notification will ask users for permission, and then the Mail & Calendar app will run a synchronization and get everything up to speed.

By expanding the Focused Inbox and other features to Gmail accounts, Microsoft is a step closer to bringing feature parity to the Windows 10 Mail & Calendar app, which has suffered from a relative dearth of features compared to the Outlook Mobile apps for iOS and Android. Given Microsoft’s cross-platform focus, that’s a good thing, as it means that a user can feel more comfortable running a Windows 10 PC to go along with an iPhone or Android smartphone.

As always, Microsoft wants feedback on the new functionality, and anyone who loves or hates the new Focused Inbox can simply go to “Settings,” then “Feedback” in the Mail & Calendar app to let the company know their thoughts. Again, the Gmail account support won’t roll out immediately, so keep an eye out for that notification to get your Gmail messages more focused.

HTC’s upcoming flagship will be called the HTC U 11

The new name signifies HTC’s strategy for streamlining its mobile branding.

We’ve already seen what HTC’s latest flagship will look like, and today we learn its name: the HTC U 11. This info comes from Evan Blass of VentureBeat, who spoke with an individual briefed on the company’s plans. The source also confirmed that the new device will be available in five different color options.

The phone was previously known to the world as the HTC Ocean, and the new name seems to signify a shift in HTC’s strategy for branding its mobile devices. The number obviously denotes that this is a follow-up to last year’s flagship, the HTC 10, while adding the “U” brings the phone’s branding in line with the two phones the Taiwanese company has already released in 2017, the HTC U Ultra and HTC U Play.

While we’re sure to hear more leaks in the coming weeks before its May 16 launch event, we do know a fair bit about this new phone already. In terms of specs, the phone will sport a 5.5-inch QHD display and be powered by a Snapdragon 835 with 4GB of RAM, along with a 12MP camera, a 3,000mAh battery and probably no 3.5mm headphone jack, all pretty standard stuff. But what might set the HTC U 11 apart from the rest of the pack is a unique feature that HTC seems quite excited about: a touch-sensitive frame that will allow you to squeeze the sides of your phone to perform specific actions such as launching an app or snapping a photo.

We’ll have to wait until we’re hands-on to see how well that feature jibes with everyday use, but we want to know what you think of the HTC U 11. Let us know in the comments below!

These are the first five things you should do with your Samsung Gear VR

You’ve got a new Gear VR! Now what?

Your shiny new Samsung phone came with a headset capable of immersing you in some really cool games and videos, but there’s a lot more to the Samsung Gear VR. This is basically a portable entertainment center, and if you’re prepared for the best possible experience you’re going to get it. All you need to do is make sure you’re set up to have a lot of fun in VR, which isn’t all that different from having fun anywhere else.

Here are some quick tips for setting up your Gear VR for maximum fun times.

Not sure how to assemble your Gear VR? Check out our guide!

Get some headphones

Just about every Gear VR experience will tell you it is best with headphones, and with good reason. Headphones make it much easier for you to feel immersed in the experience you are seeing, by making it so you hear things from every direction just like you would in that other kind of reality that doesn’t require a headset.

If you got your Gear VR for free with a Galaxy S8, your phone came with a set of decent earbuds that are perfect for this experience. If you’re looking for some better options, we’ve got a few for you to choose from.

The best headphones for your Samsung Gear VR

Create an Oculus Avatar

The Oculus App will grab your Facebook profile picture as the acting default for your Oculus profile, but there’s another option that is way more exciting — Oculus Avatars! These are more dynamic, often silly versions of yourself you can dress up as you please, and they move around in VR synced up to your head movements.

Oculus Avatars can be used in any app, which means you will run into apps and games on your Gear VR where other people will be able to see and interact with you as your Oculus Avatar. Grab the Avatar Editor app from Oculus and have some fun with this, so you can go be a part of the fun later.

Read more: How to set up your Oculus Avatar

Set up a payment method

You don’t have to buy apps and games from Oculus, and in fact there are plenty of great free experiences to be had from within your Gear VR. Even if you don’t think you’re ever going to pay for apps in your Gear VR, you should probably add a payment method to your account. Having one already loaded makes it possible for you to quickly buy just by entering your PIN, so you can go from seeing something cool to actually using it in seconds instead of minutes.

The Oculus Store lets you use a bunch of different cards, or you can add your PayPal account if you’re not a fan of having your card stored somewhere. Either way, this is absolutely something you should do before you get too far into your Gear VR experience.

Change the notification settings

Your Gear VR is set up to pass notifications from your phone to the headset, so you get a little pop-up in VR when someone texts or calls you. Unfortunately, there isn’t much you can actually do with these notifications while you are wearing the Gear VR, so they mostly just interrupt your game.

Oculus lets you disable these notifications entirely so you aren’t interrupted, and it’s a good idea to consider using this feature. It keeps you distraction free in VR, which is important if you’re watching a video or deep in a game.

How to adjust Gear VR notifications

Check out the Events calendar

Without even putting your phone into the Gear VR, you can check and see what major events are happening in your favorite VR apps. The Oculus App has an events tab, which lets you mark yourself as interested in a particular event and notifies you when that event gets closer.

These events range from in-game specials to live performances and even big sports days, so remember to scroll through it as often as possible to avoid missing anything fun!

Looking for more? Check out the VR Heads Ultimate Guide for your Gear VR!

Hulu eyes Joe Hill’s ‘Locke & Key’ horror comic for new series

After a few false starts, Joe Hill and Gabriel Rodriguez’s award-winning horror comic Locke & Key is getting another shot at coming to your screen. Hulu is producing an hour-long pilot adaptation with Lost’s Carlton Cuse executive producing and Doctor Strange director Scott Derrickson filming it.

I’m directing the @joe_hill TV pilot adaptation of his own amazing graphic novel for Hulu and producer @carletoncuse pic.twitter.com/7J6PzffSvk

— Scott Derrickson (@scottderrickson) April 20, 2017

The comic follows three siblings of the Locke family returning to their ancestral home in Lovecraft, Massachusetts, after their father is brutally murdered. There they explore a house with doors unlocked by magical keys that bestow powers…and a malevolent demon determined to steal them all for itself.

Cuse developed the pilot while Hill wrote its script. The son of horror icon Stephen King, Hill wrote several best-selling novels (Heart-Shaped Box, NOS4A2, The Fireman) before embarking on Locke & Key with artist Rodriguez, which took home a couple of British Fantasy Awards and an Eisner, comics’ Oscar-equivalent.

The first pass at a Locke & Key adaptation was six years ago, when Fox ultimately passed on a pilot backed by producer pair Roberto Orci and Alex Kurtzman and starring Miranda Otto and Nick Stahl. Amazon’s audiobook service Audible adapted the series into a 13-hour radio drama in 2015 with its own impressive cast, but here’s hoping Hulu’s take is the small screen version the comic’s fans have been waiting for.

Via: The Verge

Source: The Hollywood Reporter

Twitch kicks off Science Week by streaming Sagan’s ‘Cosmos’

Prepare to see all kinds of science-y streams on Twitch next week. The streaming platform is holding a week-long celebration of all things science, starting with a marathon of Carl Sagan’s Cosmos: A Personal Voyage. Twitch’s Cosmos channel will broadcast all 13 episodes of the series twice — the first one will begin on April 24th, 3PM Eastern, while the second run will start airing on April 27th, 5PM Eastern. Creators will also be able to co-stream the show, though, so check around if you want to hear some modern commentary on top of Sagan’s dulcet tones.

After the second broadcast, the official Twitch channel will air a live Q&A with Ann Druyan, Cosmos co-creator and Sagan’s wife. Druyan said she’s “truly excited to share Cosmos… with the vast Twitch community.” Her husband “wanted to tear down the walls that exclude most of us from the scientific experience,” after all, “so that we could take the awesome revelations of science to heart.” She added that “the power of the original Cosmos series, with its enduring appeal to every generation since, is evidence of how much we hunger to feel our connection to the universe.”

The marathon and Druyan’s interview aren’t the only things you can look forward to. As part of Science Week, Twitch is also interviewing quite a lengthy list of prominent personalities in the field, including:

- Matthew Buffington – Director of Public Affairs at NASA’s Ames Research Center in Silicon Valley and host of NASA in Silicon Valley podcast

- Ariane Cornell — Head of Astronaut Strategy and Sales and Head of North American Sales for the New Glenn Rocket at Blue Origin

- Scott Manley — Astronomer and online gaming personality under the handle, “Szyzyg,” best known for video content about science and video games like Kerbal Space Program

- Pamela Gay — Astronomer and Principal Investigator of CosmoQuest, a citizen science facility, and the Director of Technology and Citizen Science at the Astronomical Society of the Pacific.

- Kishore Hari — Science educator and director of Bay Area Science Festival, based out of the University of California, San Francisco, best known as one of the lead organizers of the global March for Science

- Fraser Cain — Publisher of Universe Today, one of the most visited space and astronomy news websites on the internet, which he founded in 1999. He’s also the co-host of the long-running Astronomy Cast podcast with Dr. Pamela Gay. Fraser is an advocate for citizen science in astronomy, and on the board of directors for CosmoQuest, which allows anyone to contribute to discoveries in space and astronomy.

- EJ_SA — Streaming on Twitch since December 2012, EJ_SA has been focused on showing viewers the seemingly magical accomplishments of past, present, and future space programs in KSP. This is where Space Shuttles fly, Rockets land, and Space Stations are built! Interactive chat, questions answered and weird facts about space all come together here!

- Phil Plait — Astronomer and science communicator. He writes the Bad Astronomy blog for Syfy Wire, was the head science writer for the new Netflix show Bill Nye Saves the World!, and is the science consultant on the science fiction mini-series Salvation coming out in the summer of 2017. He is a tireless promoter of science and lives to share his joy for the natural world.

Source: Twitch

Apple fills new hardware team with ex-Google satellite execs

Apple has hired two people with intriguing backgrounds in the field of satellite technology: John Fenwick, the former head of Google’s spacecraft business, and Michael Trela, the ex-lead of Google’s satellite engineering group. Bloomberg reports the hires, citing people familiar with the matter.

Fenwick and Trela are apparently joining a team led by Dropcam founder Greg Duffy, though there’s no concrete information about their assignments at Apple. However, there’s precedent for a nascent satellite program: Technology industry giants including Facebook, SpaceX and Google are designing drones and satellites to deliver internet to rural regions of the world.

It wouldn’t be surprising for Apple to dive into this industry, too. After all, more internet users means more potential consumers. Of course, satellite technology can also be used in imaging, another area of interest for Apple as it expands its Maps service and starts dabbling with autonomous cars.

Source: Bloomberg

Michael Kors taps your Instagram feed to beautify your smartwatch

Last month, Michael Kors announced a “My Social” app that lets you tap into your Instagram feed for watch face backgrounds. Today, the company is sharing a video that shows exactly how you’ll do that and why you might want the feature. That is, if you already own one of the Michael Kors Access watches. The app rolls out to the company’s Dylan and Bradshaw devices via an over-the-air update on April 25th. By the looks of the trailer, those smartwatches are about to get a lot better-looking.

With My Social, you can pull images from your Instagram account to turn into the background for a watch face. Sign in to your profile, pick a picture, then select a filter and watch style before displaying it as your home screen. There is no word yet on whether other social networks will be supported in the future.

Here’s the video from Michael Kors that shows some of the looks you can achieve:

Although there are ways to set photos as backgrounds for Android Wear (and 2.0) faces, these aren’t very convenient at the moment. Third-party tools like Photo Watch let you pick pictures from your own album, Google Photos or a curated list of Internet images, but don’t present many attractive watch styles. Having to go through Instagram might bother people who want to use a photo they haven’t posted, but the new tool will please Michael Kors customers who likely already share plenty of designs they like to the social network.

The company’s honorary chairman Michael Kors told Engadget, “Our watch faces are designed to do what fashion does—express your style, mirror your mood, reflect the fashion trends, and now with My Social, enable you to have a completely unique point of view.” On the face of his Dylan smartwatch, Kors currently uses a picture of his cat Bunny, whom he says “is naturally very chic.”

We’re not sure just how many people own one of Michael Kors’ connected watches at the moment, or whether the company plans to bring My Social to more devices in future. But in the meantime, don’t be surprised if you find yourself spotting Instagram-style faces on fashionistas’ wrists very soon.